The last 15 years have seen the fundraising industry advance at an almost light-speed pace. Organizations of every size and mission now understand the value of data and are invested in tracking and capturing it. Growth in technology and talent has driven a rise in descriptive and predictive analytics, and in reporting that creates insight into donor activity. Digital activity tracking, open and click-through rates, and even A/B testing, common in the retail marketing space, are widely adopted approaches to drive activity and action. I am a passionate supporter of all these approaches and will continue to be, but they are rapidly approaching their functional ceilings. All of these crucial activities tell us what may happen, how to influence what may happen, and what did happen. What is lacking is a framework to answer a more fundamental question: why did it happen?

This paper outlines a framework to support greater understanding of donor engagement and philanthropic support using principles from behavioral economics. It also outlines slight modifications to widely adopted approaches in order to gain greater insight into motivation through thoughtful, consistent, and well-crafted survey efforts. I hope it will inspire you to explore behavioral economics further.

Misbehavior

To inspire a new approach to understanding a donor’s relationship with a charity, it’s necessary to begin with a very direct analysis of common approaches to using data in fundraising. Hilton Hotels, for example, seeks to engage and market to customers and potential customers. It employs analytics to maximize the immediate and lifetime value of each customer relationship (for Hilton), and it has a robust reporting environment that provides active strategic information for timely decision making. Many of the techniques I mentioned, such as analytics, reporting, and data capture, have been borrowed and adapted from for-profit examples like Hilton. But as non-profits, we must be aware of one significant difference from our for-profit brethren, a distinction that can alter the impact of these techniques.

From a basic economic perspective, donating money to a charity is crazy; it’s an irrational exercise. Money is exchanged for goods, services, or benefits whose value is agreed upon through market understanding and pricing. In fundraising, value is not commonly shared. If we find a chic penthouse in Manhattan for $10M, you and I may not enjoy the layout or location, or even be able to afford it, but we generally agree the penthouse is worth $10M. By comparison, if I donate $100K to my alma mater (University of Minnesota), the gift may have value for me but little value for non-alumni or non-Gopher fans. There is no “common cost/common benefit,” and that unique characteristic can affect analytics.

If I have stayed at a JW Marriott, I am likely a strong prospect for a Waldorf (Hilton) property: there is a relational preference, even across brands. As a University of Minnesota donor, however, I have no interest in giving to other Big Ten universities. In fact, I could never imagine supporting my rival with a gift. Think about your rival college. Would you ever donate to them? Broadly, philanthropy is “non-transferable” with respect to preference and is deeply rooted in personal experiences (school attended, health care received, a favorite local museum or animal adoption agency).

These examples are not meant to discourage anyone from developing and leveraging analytics; they are meant to highlight the unique psychology of our “marketplace” so we can use these techniques in a more impactful manner. Behavioral economics offers dozens of principles to explain human behavior. Here are three that I believe are particularly relevant to understanding donor behavior:

- Anchoring

  - When confronted with an unknown or new situation, we tend to anchor price or value either to situations we already know (experience) or to recommendations. In philanthropy, you can see this in the “suggested gift amount” on a direct mail piece or online giving page. There is always an option for “other,” but the solicitation primes you with amounts, and most donors choose what is “socially suggested.”

- Social Proof or Herding

  - People often look for cues in the behavior of others, particularly those they know well (friends and family), to signal how to respond to a situation. This helps explain the phenomenon of crowdfunding and fundraising ambassadors. If I see a fellow alum make a pledge, it can affect my decision making.

- Self-Signaling

  - People tend to strive toward, and take actions in line with, their “idealized” self, even absent recognition from others. This helps explain why we recycle or return a lost wallet. Recognition and benefits are sometimes, but not often, motivators for philanthropy.

What About Satisfaction?

Given that philanthropy occupies a related but unique place in psychology and economics, how can we create greater understanding? If we had only 50 constituents, 30 supporters, and 3 large supporters, we could simply and reasonably hold conversations with all of them. In practice, the constituency of anyone reading this is far larger, and that’s why we use analytics: it provides human insight at scale.

The analytics I propose are not as technically sophisticated as the for-profit techniques highlighted earlier. We are not looking to measure, count, or predict an outcome; we are seeking to understand the “why” of motivation and engagement. Action, or inaction, after all, is most often an expression of our motivation, engagement, and desire.

The most practical way to measure, and hopefully understand, engagement across a large group of people is through thoughtful, consistent, and frequent survey work. I can imagine the reader’s eye roll: “Oh great, another survey. We do lots of surveys!” And yes, many organizations do engage in robust and extensive survey efforts. But in my experience counseling clients, the significant majority of these efforts concern finite opinions about programs, recognition opportunities, campaign priorities, or satisfaction. These are all, in their own way, simply measurements of activity, action, and behavior. What they do not measure is fundamental engagement.

The satisfaction survey is the one I am most frequently presented with as a measure of engagement, and when it is, I offer this relatable story. There is a gas station near where I live that is easy to get to, open 21 hours a day (closed 2–5 am), and reasonably clean, with friendly staff. I am satisfied with my experiences there, but I am not engaged. I am not blogging or posting on Facebook about this gas station. When I need gas, I do not drive past other gas stations just to give it my business. I have positive feelings about specific interactions, but I remain wholly neutral when it comes to the question “Could you imagine a world without this brand?” If the gas station ran a satisfaction survey, I would expect “very satisfied” to land somewhere near 75%. “Very engaged,” however, may be closer to 15-20%. I would never suggest that a client abandon its satisfaction surveys; they have significant value. Just be careful not to mistake satisfaction for engagement.

Capturing a Feeling

How can we capture engagement? Gallup (where I previously worked) has long been a leader in engagement methodology, and I have found that its core principles fit very well in our space. I recommend that organizations develop a very limited survey instrument (eight questions or fewer) and send it to everyone quarterly to measure change over time.

Question phrasing is important, and the best engagement surveys often ask things we may feel slightly uncomfortable phrasing, such as “University of Minnesota is a non-profit for people like me.” These questions capture broad, thematic opinions that may feel more ephemeral but are foundational to action and inaction.

With engagement measurement, I always strongly recommend that clients survey as many people as possible; segmentation can be done later, as needed. When I share this approach, I most often get confused silence, stares, and sometimes slight pushback. “We survey top donors” is a common reply. But the engagement of top donors shouldn’t be a mystery; that’s why they are top donors. Constituents on the “margin” of support (non-donors, infrequent donors, lapsed donors, and so on) are those whose feelings, once understood, may have the greatest potential fundraising impact for an organization.

An additional benefit of brief, regular “engagement checks” is that they provide a current “scorecard” of engagement efforts. If we use a traditional 1-5 Likert scale and donor engagement averaged 4.1 at the end of 2018, a movement to 4.3, or even 4.2, is significant. By consistently asking the same core questions, tied not to moments but to fundamental feelings, we can measure the broader impact of almost any tactic, strategy, or investment.
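A quarterly scorecard of this kind is simple arithmetic: average each respondent’s answers to the core items, average those scores within each survey period, and compare consecutive periods. The Python sketch below assumes a hypothetical survey export in which each response carries a `period` label (sortable strings such as `"2018-Q4"`) and 1-5 Likert answers under made-up item names (`people_like_me`, `proud_to_support`, `cannot_imagine_without`); a real instrument would substitute its own eight or fewer core questions. The sample responses are illustrative only, not client data.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical core items; substitute your own instrument (eight questions or fewer).
CORE_ITEMS = ["people_like_me", "proud_to_support", "cannot_imagine_without"]


def respondent_score(response: dict) -> float:
    """Average one respondent's 1-5 Likert answers across the core items."""
    return mean(response[item] for item in CORE_ITEMS)


def engagement_scorecard(responses: list[dict]) -> dict[str, float]:
    """Mean engagement score for each survey period, ordered by period label."""
    by_period = defaultdict(list)
    for response in responses:
        by_period[response["period"]].append(respondent_score(response))
    return {period: mean(scores) for period, scores in sorted(by_period.items())}


def period_changes(scorecard: dict[str, float]) -> list[tuple[str, str, float]]:
    """Change in mean engagement between each pair of consecutive periods."""
    periods = list(scorecard)
    return [
        (prev, cur, scorecard[cur] - scorecard[prev])
        for prev, cur in zip(periods, periods[1:])
    ]


if __name__ == "__main__":
    sample = [  # illustrative responses only
        {"period": "2018-Q4", "people_like_me": 4, "proud_to_support": 4, "cannot_imagine_without": 4},
        {"period": "2018-Q4", "people_like_me": 5, "proud_to_support": 4, "cannot_imagine_without": 4},
        {"period": "2019-Q1", "people_like_me": 5, "proud_to_support": 5, "cannot_imagine_without": 4},
        {"period": "2019-Q1", "people_like_me": 4, "proud_to_support": 4, "cannot_imagine_without": 4},
    ]
    card = engagement_scorecard(sample)
    for period, score in card.items():
        print(f"{period}: {score:.2f}")
    for prev, cur, delta in period_changes(card):
        print(f"{prev} -> {cur}: {delta:+.2f}")
```

Keeping `CORE_ITEMS` fixed from one period to the next is the design choice that matters most here: the change between periods is only interpretable if the same core questions are asked every time.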

Say-Do Paradigm

Another principle of behavioral economics is that people often hold opinions and feelings that run counter to their actions or activity; some refer to this as the “say-do” or “feel-do” paradigm. That is, people express sentiments but act in ways that directly oppose them. Every constituency has a prominent group that meets this description; my anecdotal experience across more than twenty clients puts this group in the 20%-25% range. If we accept that this happens, we can first identify who they are, what they feel, and what they do: basic behavioral economics and survey methods can help us with that. Once they are identified, the next steps past “who” are “why?” and “how can we change?” What I am proposing here is merely a way to identify these “constituent conundrums,” so that we are presented with an opportunity to effect change.

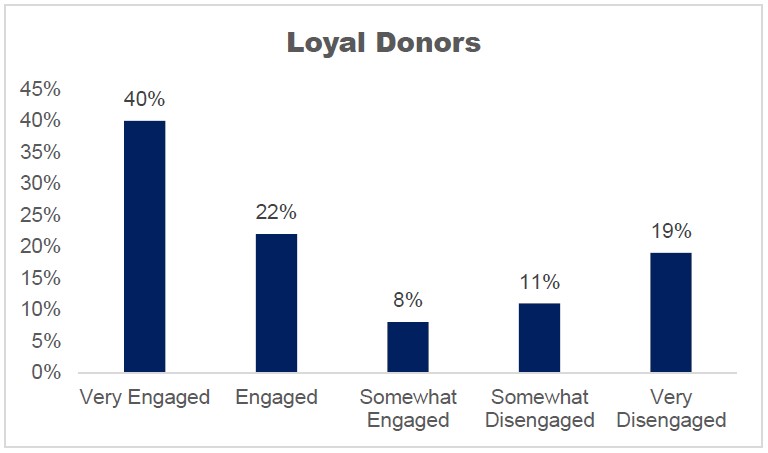

The most direct way to identify these constituents isn’t technically sophisticated or revolutionary; it’s just simple data manipulation that aligns what people say with what they do. Using email as an “ID,” we can link perspectives to behaviors. In 2018, I used this approach with a client, and we found something surprising to all involved: 30% of Loyal Donors, those who had given every year over the previous five years, felt somewhat or very disengaged.
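Aligning say and do amounts to a join on a shared key followed by a filter. The Python sketch below is a minimal illustration under stated assumptions, not the exact method used in the 2018 project: giving history is a hypothetical mapping from email to the set of years with a gift, survey results map email to a single 1-5 engagement score, a “Loyal Donor” is assumed to have a gift in each of the five years ending in `last_year`, and “somewhat or very disengaged” is assumed to mean a score of 2 or lower. All names (`link_say_and_do`, `find_say_do_gaps`, and so on) and sample records are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Constituent:
    """Hypothetical record: giving history (do) joined to one survey answer (say)."""
    email: str
    gift_years: set[int]       # years with at least one gift
    engagement: Optional[int]  # 1-5 self-reported engagement; None = no survey response


def normalize_email(email: str) -> str:
    """Trim and lowercase so CRM and survey exports match on the same key."""
    return email.strip().lower()


def link_say_and_do(giving: dict[str, set[int]], survey: dict[str, int]) -> list[Constituent]:
    """Join giving history to survey scores, using email as the shared ID."""
    scores = {normalize_email(e): s for e, s in survey.items()}
    people = []
    for email, years in giving.items():
        key = normalize_email(email)
        people.append(Constituent(key, set(years), scores.get(key)))
    return people


def is_loyal(c: Constituent, last_year: int, span: int = 5) -> bool:
    """True if the constituent gave in every year from last_year - span + 1 to last_year."""
    return all(y in c.gift_years for y in range(last_year - span + 1, last_year + 1))


def find_say_do_gaps(people: list[Constituent], last_year: int,
                     threshold: int = 2) -> tuple[list[str], float]:
    """Emails and share of surveyed loyal donors whose engagement is <= threshold."""
    loyal = [c for c in people if c.engagement is not None and is_loyal(c, last_year)]
    gaps = [c.email for c in loyal if c.engagement <= threshold]
    share = len(gaps) / len(loyal) if loyal else 0.0
    return gaps, share


if __name__ == "__main__":
    giving = {  # illustrative records only
        "Ana@Example.org": {2014, 2015, 2016, 2017, 2018},
        "ben@example.org": {2014, 2015, 2016, 2017, 2018},
        "cai@example.org": {2016, 2018},
        "dee@example.org": {2014, 2015, 2016, 2017, 2018},
    }
    survey = {"ana@example.org ": 2, "ben@example.org": 5, "cai@example.org": 1, "dee@example.org": 4}
    emails, share = find_say_do_gaps(link_say_and_do(giving, survey), last_year=2018)
    print(emails, f"{share:.0%}")
```

Matching on email will miss constituents who answer the survey from a different address than the one on file, so the share of survey responses that link to a giving record is worth reporting alongside the result.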

Our project was not designed to answer “why” or “how to change,” but isolating constituents who dutifully support the organization yet feel distant from it proved a key insight and a priority for further study. The organization now knows who these “say-do” donors are.

Creating Action

Some of the concepts I have shared may be new to you, and some may be variations of things you already employ or value. So where should you go from here? I recommend three steps:

- Review your survey efforts and ask candidly: “Are we asking about engagement, or are we asking about satisfaction?” Revise as necessary.

- Merge action and activity with survey information: what people say they feel versus what we observe them do. This alignment is the second foundational step toward a framework for understanding alignment in your constituency, and the opportunities that lie within misalignment (constituents who love you yet do not act, or donors who are active yet do not feel connected).

- Consider your most confounding or mysterious significant donor population. It is likely a great candidate for a targeted assessment of behavior through a behavioral economics lens.

Viewed through a different lens, and with some simple, thoughtful applications of survey methods and analytics, the answers to the question “why” are before us.